The impact of research can be estimated by how often it is cited in subsequent publications. This technique is known as Bibliometrics: the quantitative analysis of scholarly communication.

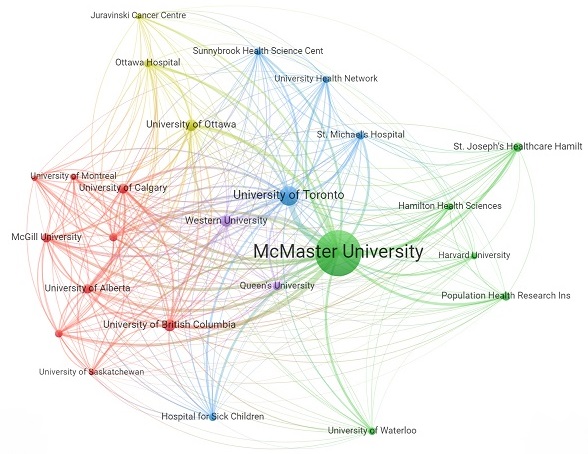

When aggregated at the level of departments, universities, and countries, patterns within this metadata can provide strategic insights into the evolution of an academic field (“Scientometrics”).

McMaster Library provides instruction on Bibliometric databases, the strategic analysis of research opportunities, and assistance with managing researcher profiles. In-depth collaboration on research projects (as a co-author) is welcomed.

Beyond simply counting citations, Bibliometrics is the space for exploring how ideas flow.

McMaster Library subscribes to a range of databases that index the academic literature:

- Web of Science & InCites

- Scopus

- Dimensions

- Altmetric Explorer

In addition, several freely accessible databases are available:

- OpenAlex

- Wizdom.ai

- The LENS

At the cutting edge of information science, new ways of exploring citation networks are appearing. While some features are self-explanatory, many of the concepts are both subtle and complex. Do not hesitate to ask for help:

Citation networks:

Artificial Intelligence boosted searching:

Algorithms that leverage citation patterns:

Bibliometrics can provide strategic insights into the evolution of an academic field. Individual and group consultations are available for help with finding and analyzing Bibliometric metadata. Training is available on the databases that the Library subscribes to:

- Web of Science & InCites

- Scopus

- Dimensions

- Altmetric Explorer

Get recognized for your research output:

- Academic workflows are now almost entirely digital.

- This enables a fluid exchange of articles, datasets, and code.

- Keeping track of who is sharing what online relies on persistent identifiers (PIDs), which ensure that researchers get credit for their work:

  - Documents have DOIs

  - People have ORCIDs

- The ORCID protocol allows systems to exchange metadata directly, which relieves much of the administrative burden.
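One reason persistent identifiers can be exchanged reliably between systems is that they are machine-checkable. As a minimal sketch, the snippet below validates the check character at the end of an ORCID iD, which ORCID documents as an ISO 7064 MOD 11-2 checksum. The function names `orcid_check_digit` and `is_valid_orcid` are illustrative and not part of any ORCID library. The check only confirms that an iD is well-formed, not that it is registered to a person.

```python
import re

# Assumed helper names for illustration; not an official ORCID API.
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")


def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for digit in base_digits:
        total = (total + int(digit)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Return True if the iD has the right shape and a matching check character."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_digit(digits[:15]) == digits[15]


if __name__ == "__main__":
    # 0000-0002-1825-0097 is the iD of ORCID's fictitious researcher, Josiah Carberry.
    print(is_valid_orcid("0000-0002-1825-0097"))
    # Changing the last character breaks the checksum.
    print(is_valid_orcid("0000-0002-1825-0098"))
```

A check like this catches most typing errors before an identifier is stored in a researcher profile.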

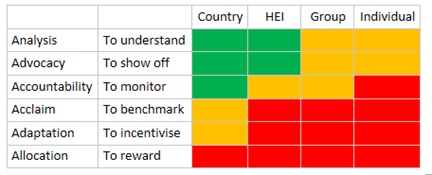

Impact metrics can be misleading if used carelessly!

The ease with which bibliometric data can be used to rank people and institutions has led to harmful decision making. While numbers allow for useful comparisons in a well-defined context, the quality of a researcher cannot be reduced to a single dimension. The h-index is particularly harmful in this respect. Bibliometric indicators provide insights that complement, but do not replace, qualitative peer review: the best way to evaluate a research article is to read it. Please consult us if the purpose of your analysis falls into the yellow or red categories:

The academic sector has developed principles for the responsible use of metrics:

- DORA [The San Francisco Declaration on Research Assessment]

- Leiden Manifesto

- 10 principles for the responsible use of university rankings [CWTS Leiden]

- More Than Our Rank
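To illustrate why a single indicator such as the h-index can mislead, the sketch below computes it for two hypothetical researchers. The h-index is the largest number h such that h of a researcher's papers each have at least h citations. The citation counts are invented for illustration only, and `h_index` is an illustrative helper rather than the implementation used by any particular database.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


# Invented citation counts per paper (hypothetical data).
steady_researcher = [5, 5, 5, 5, 5]
landmark_researcher = [900, 400, 120, 60, 5, 1, 0]

if __name__ == "__main__":
    for name, papers in [
        ("steady", steady_researcher),
        ("landmark", landmark_researcher),
    ]:
        print(name, "h-index:", h_index(papers), "total citations:", sum(papers))
```

Both profiles produce the same h-index even though their total citation counts differ by more than an order of magnitude, and neither number says anything about the content of the work.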

McMaster Experts generates up-to-date reports of the publications produced by a department.

The accuracy of these reports depends on how well researcher profiles are maintained. Training is available for faculty on how to curate their profiles.